How long does PGGB take to remaster a CD album?

It depends on the length of the tracks, but with at least 32GB of RAM and a fast drive for virtual memory you can expect processing at 3x real-time speed or faster. A typical CD album may therefore take 15 to 20 minutes to process.

If using quad precision (Insane), expect the above timings to increase tenfold, and if using octuple precision, expect them to increase thirtyfold.

It looks like PGGB can process really fast, can it do this on streamed music?

PGGB is available as an SDK for OEMs for that exact purpose. SDKs are available for Windows, Mac and Arch Linux.

The SDK consists of a C++ header file and static libraries.

Developing a player from scratch that plays both local files and streaming audio is a huge undertaking, and doing it the right way (managing buffers, load balancing, keeping any processing noise to an absolute minimum, etc.) is even harder.

One option we considered is for PGGB to sit between an existing player and an endpoint, or to act like a plugin. The problem with this approach is that the latency would be unacceptable, because PGGB in the middle would only have access to the stream at the rate at which the host application sends the data. For this reason, the only way I would implement PGGB in real time is with direct access to the full track or to multiple tracks (this is possible even for streamed data, as most players cache tracks in advance).

PGGB in SDK form uses a different framework for performance and is lightweight. Our tests indicate that after just a few seconds of startup delay, gapless playback is possible.

What are 'Taps' and how many are too many?


The idea of taps, and of how many are too many, is a complicated one.

The idea of reconstruction is that when an analog signal is digitized, part of the information at any given moment in time is distributed into the signal going forward in time and going back in time. We are not going to get into the why or how, but Prof. Shannon did a great job laying out the theory of this.

This is the foundation of modern information theory. The notion of “taps” actually used to be a physical signal tap based on this theory. You could extract more information from a signal by having more taps to reconstruct it. This was done by “tapping” the wire and feeding the signal back earlier in the wire.

The reason some DACs, such as Chord DACs, use a sinc function for reconstruction is that this is what the Shannon theorem says will give you an ideal reconstruction: with an infinite number of taps, going forward and backward in time, you can perfectly reconstruct the original band-limited signal from its samples. The more taps (or the bigger the time window), the better the reconstruction. The more taps you have, the bigger the time window you need, i.e., the music track needs to be longer.

DACs like the Chord DAVE and upsamplers like the Hugo mScaler all have fixed tap lengths and windows, because they are running on “live” data that is coming through. The mScaler, with 1M taps, requires about 1.5 seconds of audio signal to calculate the value for each moment in time. It basically multiplies 1M data points by sin(x)/x and adds them up, and that is the signal. Think of it as desmearing, or bringing the true value into focus, by leveraging the information that got smeared by the digitization process.
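The "multiply by sin(x)/x and add them up" step can be sketched in a few lines. This is a minimal illustration of plain (unwindowed) sinc interpolation, not the mScaler's or PGGB's actual filter; the function names `sinc` and `reconstruct` and the test tone are assumptions made for the example.

```python
import math

def sinc(x):
    """Normalized sinc: sin(pi*x) / (pi*x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def reconstruct(samples, t, half_window):
    """Estimate the signal at fractional sample index t.

    Only samples within half_window of t contribute, so the number of
    'taps' used is roughly 2 * half_window.
    """
    lo = max(0, math.ceil(t - half_window))
    hi = min(len(samples) - 1, math.floor(t + half_window))
    return sum(samples[n] * sinc(t - n) for n in range(lo, hi + 1))

# Hypothetical test tone: a sine at 0.05 cycles per sample.
freq = 0.05
samples = [math.sin(2 * math.pi * freq * n) for n in range(2001)]
t = 1000.5  # halfway between two stored samples
true_value = math.sin(2 * math.pi * freq * t)

for half_window in (10, 900):
    estimate = reconstruct(samples, t, half_window)
    print(half_window, abs(estimate - true_value))
```

With the wider window, the estimate between samples lands much closer to the true value, which is the sense in which more taps bring the signal "into focus".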

PGGB is file/batch oriented, so it has the advantage of being able to look at as much information as is available, i.e., it can look at the whole track instead of just portions of a track. The question is, how much should it look at?

The current default setup is to look at the length of the file and select the maximum number of taps that would work for that file. If you had more taps, it wouldn’t give you any more information, because the information before the start of the song is zero and the information after the end of the song is zero.

So why not combine all the tracks in an album into one really long song?

The issue is that each of those tracks was recorded and digitized separately. If you are remastering track 2, there may be no information from track 2 that got smeared into track 3 or track 1. If you have taps that are longer than the length of track 2, you may basically be adding garbage information into track 2 (information from track 1 and track 3 is being incorrectly used to reconstruct what is in track 2).

There is a notable exception to this. If a continuous recording was artificially split into multiple tracks for convenience (for example, some classical movements, or even some live albums), then it is valid to use information from the previous and following tracks to reconstruct the current track, because it was one long recording. For these scenarios, PGGB has an option called combine.json. You designate the tracks that should be processed as one large track, and it combines them in memory and treats them as one long track for reconstruction.

Once you get your head wrapped around stuff from the future and stuff from the past impacting what something should sound like right now, the next obvious question is, why are we using track boundaries? That is a great question. What if a song is composed of many 30 second recordings that all got digitized and mixed together? Any tap length of more than 30 seconds would be using bad information.

What about mixing, where after digitization the levels of various segments are made louder or softer, or put through digital processing like echo? Taps that overlap those areas would have corrupted data for reconstruction, and you wouldn’t get the full benefit of taps.

Note that the “smearing” that taps help reverse happens at digitization. For analog recordings where there was analog mixing, since it is one long digitization step, using the full length of the track for taps makes great sense. Live recordings are similarly one longer recording, so taps work great. Heavily digitally produced and mixed albums will likely benefit less from using more taps (but they will benefit from other aspects of PGGB such as noise shaping).

Net net: there is definitely a point of diminishing returns on taps, even in ideal situations.

There are MANY non-ideal situations in our recordings that will impact the accuracy of taps being used for reconstruction.

If you have one logical recording that has been split into many tracks, use combine.json to have it processed as one long recording.

How does PGGB choose tap length for each track?

PGGB builds a windowed filter with as many taps (sinc filter coefficients) as there are samples in the upsampled track, up to a maximum (for example 1024M) specified in the GUI. The number of taps is thus determined by the track length, while Maximum Taps is just the limit of what your PC can handle, which is why it is found under DAC/System settings rather than under preferences.

For PCM input material (including DXD) at a 705.6kHz output rate, every 12 minutes of track length gets you about 512M taps. With DSD64 the math changes a bit: every 3 minutes gets you 512M taps, and you hit 2B at 12 minutes. If you wish to venture beyond 2B taps (and have tracks long enough to achieve that), you will need 128GB of memory, unless you do not mind a single track taking a whole day!

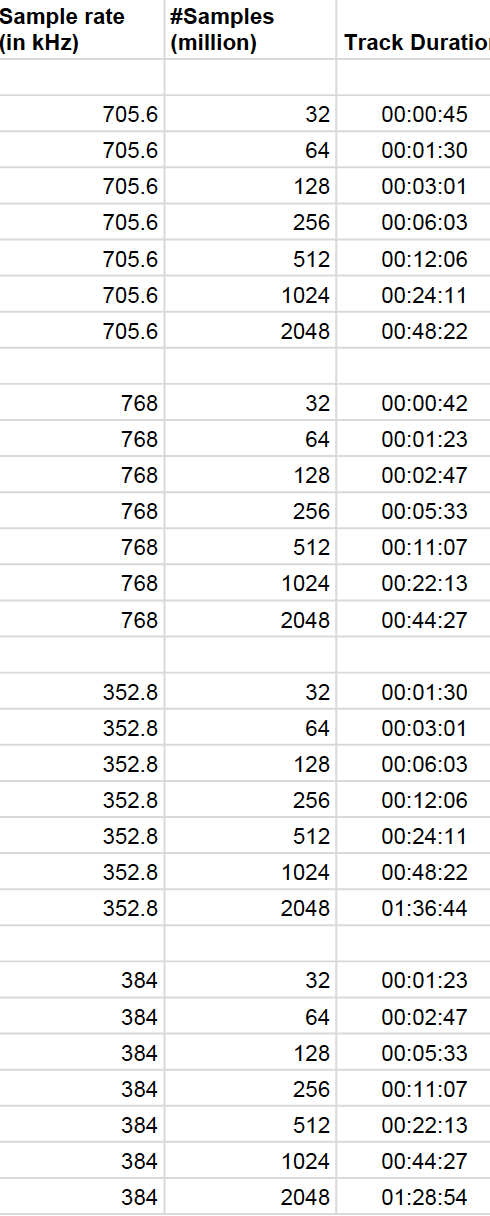

Here is a handy cheat sheet that tells you what track duration it takes to achieve a certain number of taps, at different output sample rates.

The key takeaway is that there is no point in having a filter with # taps > # samples. So PGGB effectively builds the longest filter possible for that track, which is the best possible outcome. However, as # taps increases, so does the processing cost in terms of memory and CPU, so for very, very long tracks the filter length is capped by the Maximum Taps setting, which is determined by evaluating the amount of CPU and memory in the system.
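The rule "taps = samples in the track, capped at Maximum Taps" is simple arithmetic. The sketch below is only an illustration: the function name `taps_for_track` is made up, "M" is taken to mean one million, and the DSD64 line assumes the samples are counted at the DSD64 rate of 2.8224 MHz, which is an inference from the "512M in 3 minutes" figure rather than something PGGB documents here.

```python
def taps_for_track(duration_s, rate_hz, max_taps):
    """Number of filter taps: one per sample, limited by max_taps."""
    samples = int(duration_s * rate_hz)
    return min(samples, max_taps)

MAX_TAPS = 1024 * 1_000_000  # the "1024M" example setting, M = 10**6 assumed

# 12 minutes at a 705.6 kHz output rate: roughly the "512M" figure.
print(taps_for_track(12 * 60, 705_600, MAX_TAPS))    # 508032000

# 3 minutes counted at the DSD64 rate gives the same number of samples.
print(taps_for_track(3 * 60, 2_822_400, MAX_TAPS))   # 508032000

# A one-hour track at 705.6 kHz has more samples than the cap allows.
print(taps_for_track(60 * 60, 705_600, MAX_TAPS))    # 1024000000
```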

Are linear phase filters better for upsampling?

Upsampling requires a low pass filter (to interpolate intermediate samples). The purpose of the filter is to provide good estimates of the intermediate samples, so that the upsampled signal is smooth and as close as possible to what the original music signal would have been.

There are several ways to implement the low pass filter, and each method has its pros and cons. Linear phase filters maintain the phase relationships of the original signal and tend to preserve its timing, resulting in improved imaging precision.

Minimum phase filters, when compared to linear phase filters of the same length, have better frequency domain performance, which results in better tonal accuracy and a denser presentation. For this reason, at short filter lengths minimum phase filters have a significant advantage over linear phase filters. With longer filter lengths, the choice becomes more subjective and comes down to preference and synergy with the playback chain.

With ultra-long windowed Sinc based linear phase filters such as the one PGGB uses, it is possible to get very close to the original, as if the music had been recorded at a higher sample rate. As a result, the ultra-long filters pull ahead of other implementations in terms of realism and accuracy.

The ultra-long windowed Sinc based linear phase filters come with a significant cost in terms of latency and computational requirements, which makes them hard to use for real-time playback. However, for offline upsampling and remastering, they are an excellent choice.

Why use noise shaping? Is it the same as dither?

Both dither and noise shaping can be used to reduce the audible effects of quantization (i.e., digital noise). Quantization noise is introduced because the remastered track resides in memory in 64-bit floating-point format but needs to be output at 32-bit integer precision or lower. However, the two work in very different ways.

Dither is the process of intentionally adding a small amount of random noise to randomize the quantization noise. This serves to decorrelate the music signal and the quantization error, resulting in a more natural sound. Dither does not reduce the quantization noise, it just helps to mask it.

Noise shaping does not simply mask the quantization noise; it instead pushes the noise out of the audible range. Well implemented noise shapers can almost completely eliminate quantization noise from the audible range. This helps preserve and reproduce even the smallest of changes accurately, resulting in a clean and almost analog-like quality. While noise shaping provides better results, unlike dithering it can only be used if the output sample rate is 8fS (DXD) or higher.

PGGB uses specially designed higher order noise shapers that are optimized for output sample rates and bit depths, and are designed to almost completely remove quantization noise from the audible range.
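To make the idea of "pushing the noise out" concrete, here is a minimal sketch of a first-order error-feedback noise shaper next to plain rounding. It is deliberately the simplest textbook form and is not PGGB's higher order shaper; the function names and the coarse step size are assumptions chosen so the effect is easy to see.

```python
def quantize_plain(signal, step):
    """Round every sample to the nearest multiple of step."""
    return [step * round(v / step) for v in signal]

def quantize_noise_shaped(signal, step):
    """First-order error feedback: the rounding error made on one sample
    is subtracted from the next sample before it is rounded, so the
    output error becomes a difference of successive errors, which holds
    little energy at low frequencies."""
    out = []
    err = 0.0
    for v in signal:
        target = v - err                 # compensate for the previous error
        q = step * round(target / step)  # quantize to the output grid
        err = q - target                 # error to feed back next time
        out.append(q)
    return out

# A constant level that falls between two quantization steps.
signal = [0.3] * 1000
plain = quantize_plain(signal, 1.0)
shaped = quantize_noise_shaped(signal, 1.0)

print(sum(plain) / len(plain))    # long-term average after plain rounding
print(sum(shaped) / len(shaped))  # long-term average with noise shaping
```

Plain rounding loses the level entirely, while the shaped output toggles between steps so that its long-term average stays close to the input: the error is still there, but it has moved to high frequencies.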

What is 'windowing' and why is it important?

PGGB uses Sinc function based filters for near perfect reconstruction of remastered music. A nice property of the Sinc function is that it also results in a perfect low pass filter in the frequency domain. This would eliminate aliasing and provide near perfect attenuation of out of band frequencies. In terms of sound quality, it would result in the best transparency, depth and tonal accuracy.

However, Sinc functions are infinitely long and are not practical for real world use. So the Sinc function needs to be truncated to match the length of the track being remastered; that sounds easy enough, but there is a catch.

Abruptly truncating the Sinc function results in 'ripples' in the frequency domain. The filter is then no longer a perfect brick wall filter: it will not have good out of band rejection, may not have a small enough transition band (i.e., it is not very steep), and could result in aliasing.

To get around this problem, windowing functions are used to gradually reduce the Sinc coefficients to zero instead of abruptly truncating them. Windowing reduces the 'ripples' in the frequency domain and results in better out of band rejection and a steeper filter.

Careful choice of windowing function can retain the best of both worlds, i.e., very good time domain accuracy by staying true to the Sinc function, while staying close to an ideal low pass filter in the frequency domain.

PGGB does not use a standard windowing function. PGGB uses a custom, carefully tuned and optimized proprietary window that has an order of magnitude better time domain accuracy than standard windowing functions while delivering as good or better frequency domain performance.
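The effect of windowing can be checked numerically. The sketch below builds a short low pass filter from a truncated Sinc, once with abrupt truncation and once with a standard Hann window, and compares the worst-case leakage in the stop band. The Hann window merely stands in for PGGB's proprietary window, which is not public; the function names, the 101-tap length and the cutoff are illustrative assumptions.

```python
import math

def windowed_sinc_lowpass(num_taps, cutoff, use_window=True):
    """Low pass FIR taps from a truncated Sinc.

    cutoff is a fraction of the sample rate (0 < cutoff < 0.5).
    With use_window=False the Sinc is simply cut off at both ends.
    """
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        x = n - mid
        ideal = 2 * cutoff if x == 0 else math.sin(2 * math.pi * cutoff * x) / (math.pi * x)
        window = 0.5 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1)) if use_window else 1.0
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]  # normalize to unity gain at DC

def gain_at(taps, freq):
    """Magnitude response at freq (as a fraction of the sample rate)."""
    re = sum(t * math.cos(2 * math.pi * freq * n) for n, t in enumerate(taps))
    im = sum(t * math.sin(2 * math.pi * freq * n) for n, t in enumerate(taps))
    return math.hypot(re, im)

def stopband_peak(taps, start=0.2, stop=0.5, points=201):
    """Largest gain found on a grid of stop band frequencies."""
    span = stop - start
    return max(gain_at(taps, start + span * k / (points - 1)) for k in range(points))

truncated = windowed_sinc_lowpass(101, 0.125, use_window=False)
windowed = windowed_sinc_lowpass(101, 0.125)

print(stopband_peak(truncated))  # ripple left by abrupt truncation
print(stopband_peak(windowed))   # much lower leakage with the window
```

Both filters are symmetric around their center tap, which is what makes them linear phase; the window changes only how quickly the coefficients fade out.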

What is a 'HF Noise filter'?

HF noise filter refers to a 'High Frequency' noise filter.

Most hi-res recordings (4fS or higher) contain a significant amount of residual noise (beyond 30kHz) as a result of the noise shapers used during the analog to digital conversion process. There is no music information contained in this noise, so the HF noise can be safely removed, and removing it has a positive effect on sound quality.

PGGB offers a choice of three HF noise filters. However, the HF noise filters are not applied separately; instead they are integrated into the design of the resampling filters.

Just as adding more elements to a camera lens can reduce transparency, using additional filters can reduce transparency. So PGGB uses a single optimized filter that does both reconstruction and HF noise filtering for the most transparent sound.

Does PGGB offer Apodizing filters, and why?

PGGB offers both apodizing and non-apodizing filters. Apodization comes from the Greek for cutting off the foot. It has different technical meanings depending on the application. In signal processing, it just means using a windowing function to reduce ringing artifacts due to the abrupt truncation at the beginning and end of a sample window. In digital audio, the term has been both used and misused: more often than not, 'apodizing' is used to mean a non-brick-wall filter with reduced or no 'pre-ringing', implemented as a slow roll-off filter. PGGB's filters do not fall under this category.

The problem is getting hung up on the terminology and forgetting what we are trying to achieve. With the apodizing filters used in PGGB, you should forget everything else you may find online about apodizing filters. We used the term 'apodizing' because it is immediately understood to mean something to do with improving CD audio. It is not a slow roll-off filter and it is not minimum-phase; it uses a windowed sinc filter. We could call it something else, but we do not want to add to the long list of jargon. It is apodizing in the frequency domain in the sense that it cuts off aliasing artifacts introduced during CD creation and, in our humble opinion, it is the only meaningful thing one can do to alleviate, if not reverse, the damage done to CD audio.

Aliasing artifacts are often introduced by a poor downsampling process when creating CD quality audio. This may be due either to the quality of the filters used during downsampling or to limitations of the analog to digital conversion process. As a result, aliasing is introduced near the end of the audible spectrum, which in turn can result in a harsher treble signature and reduced timing precision.

PGGB uses apodizing filters to significantly reduce, if not completely eliminate, the above-mentioned artifacts. Please note that this is applicable only to CD audio. Hi-res audio does not require apodizing.

Note again: PGGB uses linear phase filters, and it does not remove pre-ringing the way a few implementations of apodizing filters do.

Will my DAC benefit from PGGB? Does it need to be PCM DAC?

A DAC need not be pure PCM to take advantage of PGGB. Instead, the DAC needs to be able to accept higher rate PCM (preferably 16fS or more), which both R2R DACs such as the Holo Spring and May and the Denafrips Terminator+, and delta-sigma DACs such as the Chord DAVE and iFi DSD, can do (this is not meant to be a complete list of DACs, just examples).

The idea here is to provide the DAC with the highest rate remastered version of the original music track that the DAC will accept (for example, 705.6kHz or 1411.2kHz). If this remastered track is as close as possible to a true recording made at that rate, then the DAC will likely benefit from it, as the bulk of the heavy lifting has already been done. If the DAC does not do any further processing (like an R2R DAC in NOS mode), or if the DAC is able to take advantage of the higher rate PCM input (such as some delta-sigma DACs, in spite of doing some further processing), it is likely to benefit even further.

There are DACs that accept up to DXD rates and may internally resample to much higher rates. These DACs would benefit only to the extent to which they can take advantage of DXD rates.

Please refer to the 'Dacs and Players' section for more information.

Note: If it is possible to keep the processing on your DAC to a minimum, it is better. Depending on your DAC, this may require putting it in NOS mode. If that is not an option, turn off any additional filtering. If you are forced to choose a filter, then choose a linear phase filter for best synergy with PGGB.

Does PGGB support DSD upsampling?

PGGB supports converting all DSD rates to PCM, but it does not currently support creating higher rate DSD.

The reason for not supporting DSD upsampling is that it is quite challenging to achieve the same signal-to-noise ratio and time domain accuracy as higher rate PCM using single bit DSD, even at very high rates such as DSD512 or DSD1024. This requires designing high quality, stable, higher order (perhaps seventh order or higher) modulators, which is difficult but perhaps not impossible.

We are currently researching this, and we may implement it if there is significant interest in DSD upsampling using PGGB. If you know of DAC(s) that can benefit more from DSD upsampling (compared to PCM upsampling), please drop us an email.

What is 1fS, 2fS, ..., 32fS etc?

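One useful concept to learn is the fS terminology. fS refers to the 'Fundamental Sample rate'. 1fS refers to 44.1 or 48 kHz, and each higher multiple scales that base rate. Thus:

- 1fS: 44.1 or 48 kHz

- 2fS: 88.2 or 96 kHz

- 4fS: 176.4 or 192 kHz

- 8fS: 352.8 or 384 kHz (DXD)

- 16fS: 705.6 or 768 kHz

- 32fS: 1411.2 or 1536 kHz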

How big are PGGB remastered tracks?

As a rule of thumb, every 11 minutes 25 seconds of a stereo track at 16fS and 32 bits takes up about 4GB of space (which is the maximum size for 32 bit wav files). So a typical CD that is 40 to 45 minutes long will take about 16GB of space at 16fS 32 bits. At 8fS 32 bits that is 8GB, and at 8fS 24 bits it is 6GB.

Remastered tracks that are bigger than 4GB will be split, but the metadata is preserved and the track numbering is changed so that they play sequentially and gaplessly.
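These figures follow from the size of uncompressed PCM: duration × sample rate × channels × bytes per sample. The helper below is a hypothetical sketch for checking them (header and metadata overhead are ignored), and it shows that the rule-of-thumb numbers above are rounded up slightly.

```python
def pcm_size_bytes(duration_s, sample_rate_hz, bits, channels=2):
    """Uncompressed PCM payload size in bytes (no header or tags)."""
    return duration_s * sample_rate_hz * channels * bits // 8

FS = 44_100  # 1fS for CD-family rates

# 11 min 25 s of stereo at 16fS / 32 bits: just under 4 GB.
print(pcm_size_bytes(11 * 60 + 25, 16 * FS, 32))  # 3866688000

# A 45 minute album at 16fS / 32 bits.
print(pcm_size_bytes(45 * 60, 16 * FS, 32))       # 15240960000

# The same album at 8fS / 24 bits.
print(pcm_size_bytes(45 * 60, 8 * FS, 24))        # 5715360000
```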

Does PGGB support lossless compression?

PGGB supports FLAC for bit depths less than or equal to 24 bits and sample rates less than 8fS.

PGGB also supports the WavPack compression format for rates higher than 8fS or bit depths higher than 24 bits. WavPack compression is lossless, like FLAC.

PGGB will transfer all tags and artwork to both FLAC and WavPack output files.

What sort of PC will I need for running PGGB?

PGGB is a memory-intensive application, for necessary reasons.

Recommended system specification:

- At least 4 cores, 8 cores preferred

- 16GB RAM if processing CDs only and limiting to 512M taps

- For more flexibility, at least 32GB, but 64 GB recommended, and 128GB will reward you even more

- A fast NVMe SSD for input and output files, and paging file

- Recommend at least 100GB paging file (see guide for details)

Examples of systems that have been used to run PGGB:

- Custom Quiet PC

- Taiko Audio Extreme

- Quiet PC Serenity 8 Office (quietpc.com): Nanoxia Deep Silence 1 Anthracite Rev.B Ultimate Low Noise PC ATX Case. ASUS PRIME Z370-A II LGA1151 ATX Motherboard. Intel 9th Gen Core i7 9700K 3.6GHz 8C/8T 95W 12MB Coffee Lake Refresh CPU. Corsair DDR4 Vengeance LPX 64GB (4x16GB) Memory Kit. Scythe Ninja 5 Dual Fan High Performance Quiet CPU Cooler. Gelid GC-3 3.5g Extreme Performance Thermal Compound. Palit GeForce RTX 2070 8GB DUAL Turing Graphics Card. FSP Hyper M 700W Modular Quiet Power Supply. Quiet PC IEC C13 UK Mains Power Cord, 1.8m (Type G). 2 x Samsung 970 EVO 2TB Phoenix M.2 NVMe SSD (3500/2500)

- 2010 Mac Pro running Parallels: It was able to run PGGB with 6 cores and 32 GB of RAM but currently running 12 cores and 128 GB. Parallels was given at least 4 cores and at least 28 GB.

- Streacom FC10 Alpha fanless chassis, Asus Prime Z490-A mainboard, Intel i7-10700 LGA1200 2.9 GHz CPU, CORSAIR Vengeance 128GB (4 x 32GB) DDR4 SDRAM 2666, OPTANE H10 256GB SSD M.2 for Windows 10 Pro OS, SAMSUNG 970 EVO Plus SSD 2TB M.2 NVMe with V-NAND Technology, HDPLEX 400W HiFi DC-ATX power supply.

- iMac Pro (2017) — 3.2 GHz 8-Core, 32 GB, 1TB, Apple Bootcamp, Windows 10

- iMac, 48GB RAM, 3.3 GHz four core i5. Running Windows 10 Pro under Bootcamp with a shared drive set up

The amount of virtual memory PGGB needs depends on:

- The sample rate of the source track: The higher the rate, the more virtual memory is needed

- The length of the track: The longer the track, the more virtual memory is needed

This is why the most memory-intensive inputs are long tracks at high sample rates, for example a 30 minute DXD track. These tracks benefit the most from larger RAM sizes.

PGGB parallelizes the upsampling processing, but the scaling is limited to the ratio of output sample rate to input sample rate. For example, with DXD (8fS) input and 16fS output, PGGB can only run 2 parallel threads. For redbook, the ratio of 16fS to 1fS is 16, so PGGB can potentially run 16 parallel threads.

The actual amount of parallelism is dynamically calculated, as PGGB has auto-scaling logic to control the amount of parallelism so that it fits in the available system resources (# cores, amount of RAM). PGGB has other mechanisms for parallelism to speed things up further for tracks where memory is not a bottleneck.
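The thread limit described above is just the rate ratio. The sketch below illustrates that ceiling; capping it by core count is an assumption added for illustration, and PGGB's real auto-scaling (which also accounts for RAM) is not reproduced here. The function name is hypothetical.

```python
def max_upsampling_threads(input_fs, output_fs, cores):
    """Upper bound on parallel upsampling threads.

    input_fs and output_fs are fS multiples (1 for redbook, 8 for DXD).
    The ratio comes from the text; the core-count cap is an assumption.
    """
    ratio = output_fs // input_fs
    return max(1, min(ratio, cores))

print(max_upsampling_threads(8, 16, cores=8))   # DXD to 16fS: 2
print(max_upsampling_threads(1, 16, cores=16))  # redbook to 16fS: 16
print(max_upsampling_threads(1, 16, cores=8))   # same ratio, fewer cores: 8
```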

Can you suggest a sample PC build for PGGB that is also quiet?

Sure we can:

- Be quiet! Pure Base 500, No PSU, ATX, White, Mid Tower Case. Caveat: it’s a nice quiet case, but nothing great to look at.

- Prime B460-Plus, Intel® B460 Chipset, LGA 1200, HDMI, ATX Motherboard

- Core™ i7-10700 8-Core 2.9 - 4.8GHz Turbo, LGA 1200, 65W TDP, Retail Processor

- 128GB Kit (4 x 32GB) HyperX FURY DDR4 2666MHz, CL16, Black, DIMM Memory. Caveat - can easily start with 64GB

- 750 GA, 80 PLUS Gold 750W, ECO Mode, Fully Modular, ATX Power Supply

- Be quiet! Dark Rock 4, 160mm Height, 200W TDP, Copper/Aluminum CPU Cooler

- 2TB 970 EVO Plus 2280, 3500 / 3300 MB/s, V-NAND 3-bit MLC, NVMe, M.2 SSD

Can I use the Taiko Audio Extreme to run the PGGB application?

As extreme as the Taiko Extreme is, it is not quite extreme enough out of the box to run PGGB. The Extreme has a stripped down installation and memory configuration that is optimized for SQ during playback, while PGGB consumes all available system resources (and then some). The good news is that you can install PGGB on the Taiko Extreme and run it when you are not listening to music, without impacting SQ.

To get PGGB to run on the Extreme, you must configure a paging file when upsampling. By default, the Extreme has the paging file disabled to maximize sound quality. To run PGGB, you will be turning on a paging file, running PGGB, then disabling the paging file and rebooting, then listening to music on your Extreme as you normally do (for convenience, you can listen to music with the paging file on, but there may be some SQ impact).

To turn on the paging file on your Extreme, follow this path from the Start menu:

Start -> Windows System -> Control Panel -> System -> Advanced System Settings -> Advanced -> Performance Settings -> Advanced -> Virtual Memory Change

Select the drive on which you want to put the paging file (usually the C: drive), and select “custom size.” Set it to 135000 for both min and max. Click OK and your paging file is ready to go.

When you turn on or increase the size of the paging file, you do not need to reboot. If you shrink it or disable it, you will need to reboot for the change to take effect.

Note that the default configuration of the Extreme has the paging file disabled, because it could impact SQ. For best sound quality, turn on your paging file, run PGGB, then turn it off and reboot before listening to music.

If you’re doing a lot of PGGB work, give a listen to playback with and without the paging file to decide if the inconvenience of rebooting your system prior to music playback is worthwhile for you.

At 48GB, the Taiko Extreme is at the minimum amount of memory that you need to run PGGB. Typically it does OK, but upsampling DXD files or processing DSD files pushes the system to its limits. If you’re processing overnight, you won’t notice, but enjoy a cup of coffee if you’re sitting there waiting for these more intensive processing jobs to run.

If I had both PCM and DSD versions of an Album, which do I choose to remaster?

Regarding DSD… there are so many factors that come into play: original recording format, original editing and mixing format, your DAC, etc., etc. Ultimately, it all boils down to a couple of key considerations:

- Is the DSD album the best-sounding version in your library? If so, just upsample with PGGB - it will uplift SQ.

- If you also own PCM versions of the album, or are aware of other PCM versions, by all means explore upsampling your best-sounding PCM version with PGGB, and then choose the best-sounding upsampled versions.

Example 1:

Mahler 2nd, Gilbert Kaplan, Vienna Philharmonic, DG hybrid SACD. I don’t know the details of the recording, editing, and mastering format. Played natively to my DAVE in DSD+ mode, the DSD layer sounds best, with the CD layer played in PCM+ mode second best. I upsampled both versions to 32/705.6 with PGGB, played back natively on DAVE in PCM+ mode (of course), and found the following to hold:

a) The upsampled DSD version still sounds better than the upsampled CD version.

b) The gap between the 2 versions shrunk. In other words, PGGB did more good to the PCM version, but the improvement to the DSD version was still extraordinary.

Example 2:

Das Lied von der Erde, MTT, SF Symphony, recorded in DSD64, but unclear what format it was edited and mixed in. I suspect it was PCM, but not sure. I own 3 versions: DSD and CD from the hybrid SACD, and a 24/96 hi-res version purchased from Qobuz. Played natively on my DAVE, I find the 24/96 (2fS) version to sound best, with the DSD version 2nd best. After upsampling with PGGB:

a) The upsampled 24/96 version reigns supreme.

b) The gap between the upsampled 24/96 and upsampled DSD is greater than the native gap. This again suggests PGGB’s ability to impart magic is greatest with PCM, but DSD is also uplifted.

Bottom line in all of this is:

Pick the best sounding version of an album in your library (irrespective of PCM or DSD). Where it’s close or equal, always prefer the PCM version.

Does PGGB support multi-channel music?

PGGB does not currently support multi-channel PCM or DSD, but it would be relatively easy to extend it to support multi-channel. If there is modest demand for it, or a requirement for a commercial application, we are open to offering multi-channel support. Just drop us an email.